This will return a unique UploadId that we must reference in uploading individual parts in the next steps. This also means that a full large file need not be present in memory of the nodeJs process at any time thus reducing our memory footprint and avoiding out of memory errors when dealing with very large files.įirst, we will signal to S3 that we are beginning a new multipart upload by calling createMultipartUpload. The goal is that the full file need not be uploaded as a single request thus adding fault tolerance and potential speedup due to multiple parallel uploads. Let’s set up a basic nodeJs project and use these functions to upload a large file to S3. completeMultipartUpload – This signals to S3 that all parts have been uploaded and it can combine the parts into one file.uploadPart – This uploads the individual parts of the file.

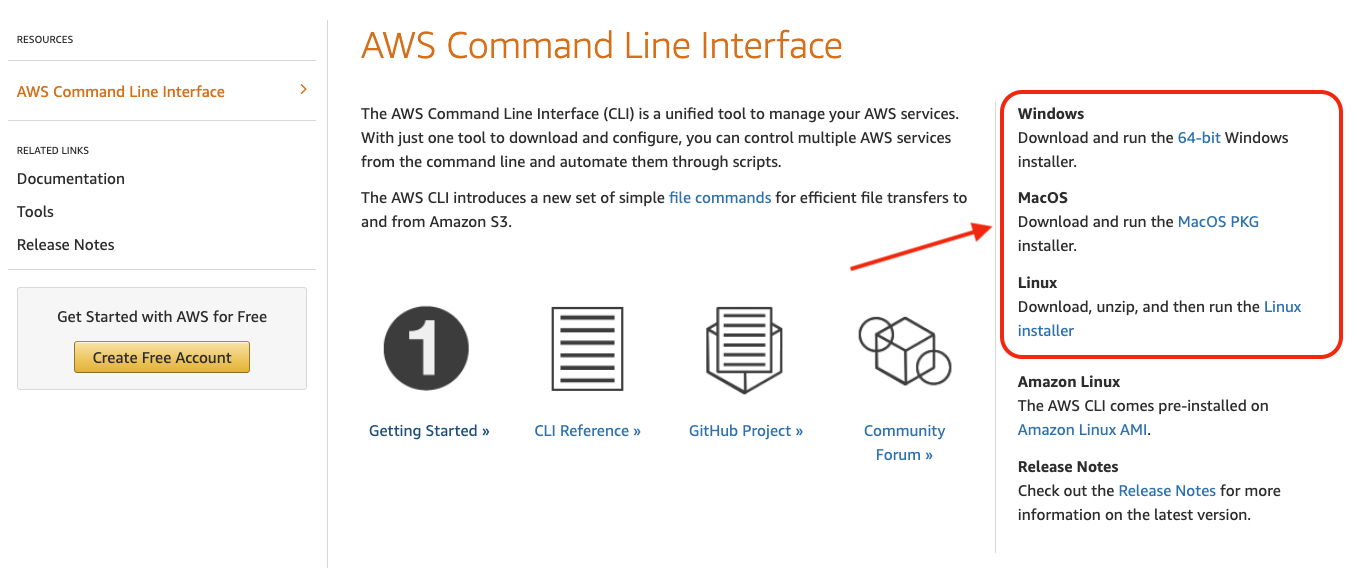

createMultipartUpload – This starts the upload process by generating a unique UploadId.We’re going to cover uploading a large file using the AWS JS SDK.Īll Multipart Uploads must use 3 main core API’s: A good way to test uploading to various solutions like this is with curl upload file capabilities. If a single upload fails, it can be restarted again and we save on bandwidth. The individual part uploads can even be done in parallel. Individual pieces are then stitched together by S3 after all parts have been uploaded. Example: AWS S3 Adapter for Assets client : newly created service assetss3 bucket : create a new bucket on S3 Management console and use the bucket name. It lets us upload a larger file to S3 in smaller, more manageable chunks. Multipart Upload is a nifty feature introduced by AWS S3. You can not create folders inside bucket, but a logical folder using ‘/’ slash can be created.This is a tutorial on Amazon S3 Multipart Uploads with Javascript. You can create a bucket where the files will be uploaded. This will be used to login to S3 Cloud from Salesforce.Īfter logging in to AWS, you can go to console screen and click on S3 under Storage & Content Delivery section. After create your AWS (Amazon Web Service) user account, login secret and key ID will be shared with you by Amazon. As a result, I have to store file temporarily on disk before uploading to S3. Consequently, after parsing file upload (HTTP POST) request, my application must store the file in S3. File Size 1-10 MB However, the client interface has to be a REST API provided by my application. REST protocol is used in this scenario.įiles will be uploaded securely from Salesforce to Amazon server. I want to store files uploaded to my app (by clients) to Amazon S3. User can be given option to upload files to Amazon S3 via Salesforce and access them using the uploaded URLs. So, sometimes organisations decide to use external storage service like Amazon S3 cloud. 
When we have to upload multiple files or attach many files to any record, Salesforce provides storage limit per user license purchased.
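The three multipart calls (createMultipartUpload, uploadPart, completeMultipartUpload) can be sketched in TypeScript as follows. This is a minimal sketch, not the tutorial's own code. MultipartClient and multipartUpload are hypothetical names. The interface only mirrors the Bucket, Key, UploadId, PartNumber, Body, ETag and MultipartUpload.Parts fields of the S3 requests, so the flow can be read and tested without AWS credentials. The sketch uploads parts one after another and assumes the caller has already split the file into chunks.

```typescript
// ASSUMPTION: hypothetical interface mirroring the request/response fields of
// the S3 multipart calls; it is not a type exported by the AWS JS SDK.
interface MultipartClient {
  createMultipartUpload(input: { Bucket: string; Key: string }): Promise<{ UploadId?: string }>;
  uploadPart(input: {
    Bucket: string;
    Key: string;
    UploadId: string;
    PartNumber: number;
    Body: Uint8Array;
  }): Promise<{ ETag?: string }>;
  completeMultipartUpload(input: {
    Bucket: string;
    Key: string;
    UploadId: string;
    MultipartUpload: { Parts: { ETag: string; PartNumber: number }[] };
  }): Promise<unknown>;
}

// Uploads pre-split chunks sequentially and returns the UploadId and part list.
async function multipartUpload(
  client: MultipartClient,
  bucket: string,
  key: string,
  chunks: readonly Uint8Array[],
): Promise<{ uploadId: string; parts: { ETag: string; PartNumber: number }[] }> {
  // Step 1: ask S3 for the UploadId that ties all parts together.
  const { UploadId } = await client.createMultipartUpload({ Bucket: bucket, Key: key });
  if (!UploadId) throw new Error("createMultipartUpload returned no UploadId");

  // Step 2: upload each part, remembering its ETag; part numbers start at 1.
  const parts: { ETag: string; PartNumber: number }[] = [];
  for (let i = 0; i < chunks.length; i++) {
    const PartNumber = i + 1;
    const { ETag } = await client.uploadPart({
      Bucket: bucket,
      Key: key,
      UploadId,
      PartNumber,
      Body: chunks[i],
    });
    if (!ETag) throw new Error(`part ${PartNumber} returned no ETag`);
    parts.push({ ETag, PartNumber });
  }

  // Step 3: ask S3 to stitch the parts together (listed in ascending order).
  await client.completeMultipartUpload({
    Bucket: bucket,
    Key: key,
    UploadId,
    MultipartUpload: { Parts: parts },
  });
  return { uploadId: UploadId, parts };
}
```

In a real project, the interface would be implemented by a thin adapter over the AWS JS SDK's S3 client; that adapter is not shown here. S3 itself also enforces part-size rules that this sketch leaves to the caller.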
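To keep only one chunk in memory at a time, the file can first be planned as byte ranges. Each range is then read from disk just before its uploadPart call. The sketch below assumes the limits from the S3 documentation: every part except the last is at least 5 MiB, no part exceeds 5 GiB, and an upload has at most 10,000 parts. planParts and PartRange are hypothetical names.

```typescript
// Limits from the S3 documentation: each part except the last must be at
// least 5 MiB, no part may exceed 5 GiB, and an upload has at most 10,000 parts.
const MIN_PART_SIZE = 5 * 1024 * 1024;
const MAX_PART_SIZE = 5 * 1024 * 1024 * 1024;
const MAX_PARTS = 10000;

// Hypothetical description of one part: a 1-based part number and a byte range.
interface PartRange {
  partNumber: number; // S3 part numbers start at 1
  start: number; // first byte of the part (inclusive)
  end: number; // end of the part (exclusive)
}

// Splits a file of fileSize bytes into consecutive ranges of partSize bytes;
// only the last range may be shorter.
function planParts(fileSize: number, partSize: number = MIN_PART_SIZE): PartRange[] {
  if (partSize < MIN_PART_SIZE || partSize > MAX_PART_SIZE) {
    throw new RangeError("partSize must be between 5 MiB and 5 GiB");
  }
  const count = Math.max(1, Math.ceil(fileSize / partSize));
  if (count > MAX_PARTS) {
    throw new RangeError(`file needs ${count} parts; use a larger partSize`);
  }
  const parts: PartRange[] = [];
  for (let i = 0; i < count; i++) {
    const start = i * partSize;
    parts.push({ partNumber: i + 1, start, end: Math.min(start + partSize, fileSize) });
  }
  return parts;
}
```

For a 12 MiB file with the default part size, this yields three parts covering bytes 0-5 MiB, 5-10 MiB and 10-12 MiB.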
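Because each part is uploaded independently, a failed part can be sent again with the same UploadId and PartNumber instead of restarting the whole file. Uploading a part number again replaces the earlier data for that number. Below is a hedged sketch of that retry logic using a hypothetical withRetry helper with exponential backoff. The attempt count and delays are arbitrary assumptions, not values from the tutorial.

```typescript
// Retries an async operation with exponential backoff. Intended to wrap a
// single uploadPart call so that only the failing part is sent again.
async function withRetry<T>(
  operation: (attempt: number) => Promise<T>,
  maxAttempts = 3,
  baseDelayMs = 200,
  sleep: (ms: number) => Promise<void> = (ms) =>
    new Promise<void>((resolve) => setTimeout(() => resolve(), ms)),
): Promise<T> {
  let lastError: unknown;
  for (let attempt = 1; attempt <= maxAttempts; attempt++) {
    try {
      return await operation(attempt);
    } catch (err) {
      lastError = err;
      if (attempt < maxAttempts) {
        // With the defaults: wait 200 ms, then 400 ms, between attempts.
        await sleep(baseDelayMs * 2 ** (attempt - 1));
      }
    }
  }
  // Every attempt failed: surface the last error to the caller.
  throw lastError;
}
```

A call would wrap one part at a time, along the lines of withRetry(() => client.uploadPart({ ... })), so a network hiccup costs one part's bandwidth rather than the whole file's.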
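The part uploads can also run in parallel. For a very large file, starting every part at once could exhaust memory and open connections. This sketch therefore uses a hypothetical mapWithConcurrency helper that keeps at most `limit` uploads in flight. It returns results in input order, which is convenient because completeMultipartUpload expects the parts listed in ascending PartNumber order.

```typescript
// Runs `task` for every item with at most `limit` tasks in flight and returns
// the results in the same order as `items`.
async function mapWithConcurrency<T, R>(
  items: readonly T[],
  limit: number,
  task: (item: T, index: number) => Promise<R>,
): Promise<R[]> {
  if (limit < 1) throw new RangeError("limit must be at least 1");
  const results: R[] = new Array(items.length);
  let next = 0;

  // Each worker repeatedly claims the next unprocessed index. JavaScript runs
  // this synchronously between awaits, so no two workers claim the same index.
  async function worker(): Promise<void> {
    while (next < items.length) {
      const i = next++;
      results[i] = await task(items[i], i);
    }
  }

  const workers = Array.from({ length: Math.min(limit, items.length) }, () => worker());
  await Promise.all(workers);
  return results;
}
```

The items could be the planned part ranges, with each task reading its range and calling uploadPart. The returned { ETag, PartNumber } list can then be passed straight to completeMultipartUpload.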
